MEET AYA

Unlocking AI's potential, one language at a time

Aya, a global open-science initiative from Cohere Labs, unites researchers to push the frontier of multilingual AI, bridging gaps between people and cultures worldwide.

Aya began as the largest open-science collaboration in ML, bringing together a community of researchers from around the world to create a powerful foundation for future innovation. This initial effort laid the groundwork for later research initiatives and additional models, pushing the boundaries of what is possible and expanding the world that AI sees.

languages contributed

independent researchers

countries

models

NEW RELEASE

Introducing Tiny Aya

A compact multilingual AI model family that runs locally on any device. Our 3.35B-parameter base model supports 70+ languages with specialized variants for different regions, delivering strong performance without cloud dependency. Choose from globally balanced or region-specialized models designed for real-world scenarios.

Tiny Aya variants

Other Aya models

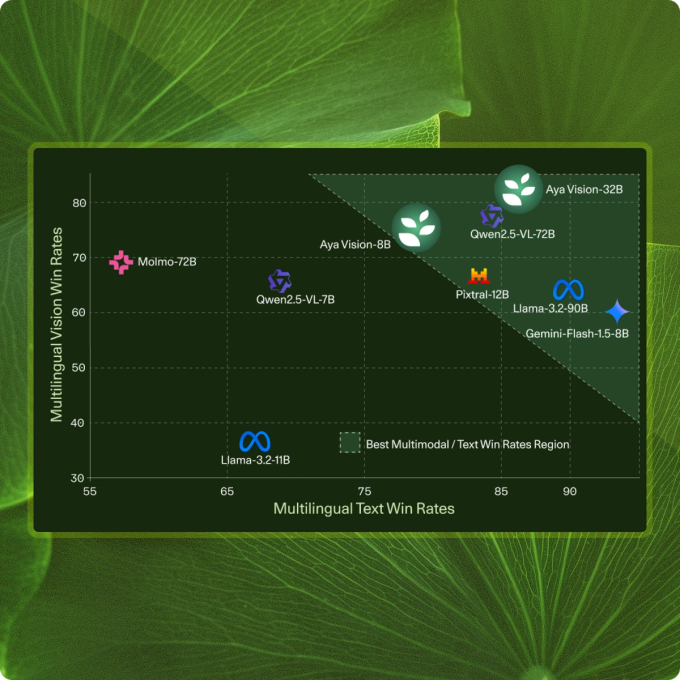

- Aya Vision is a research model that advances multilingual multimodal AI through innovative synthetic data generation, cross-modal model merging, and a comprehensive benchmark suite. It achieves state-of-the-art performance across 23 languages, outperforming larger models, while addressing data scarcity and catastrophic forgetting and reducing computational overhead by up to 40% through optimized training techniques.

- Aya Expanse redefines multilingual AI as a research model covering 23 languages, built with innovative instruction tuning and cross-lingual transfer techniques. By combining a curated open-source dataset with compute-efficient pretraining, it achieves unparalleled performance across low- and high-resource languages while reducing infrastructure costs by up to 30%, setting a new benchmark for scalable, inclusive language modeling.

- Aya 101 is a groundbreaking research model that delivers instruction-tuned proficiency across 101 languages, developed through a global collaborative effort involving 3,000+ researchers. As the cornerstone of Cohere Labs’ multilingual initiative, it sets a new standard for inclusive AI, achieving state-of-the-art performance on diverse benchmarks while remaining efficient across low- and high-resource languages. It is released open-source to accelerate equitable language technology worldwide.

Under the hood

A step forward for multilingual generative AI

Open collaboration and transparency

Resource-efficient innovation

High quality datasets

Read the papers

- multilingualScholars

Feb 13, 2024

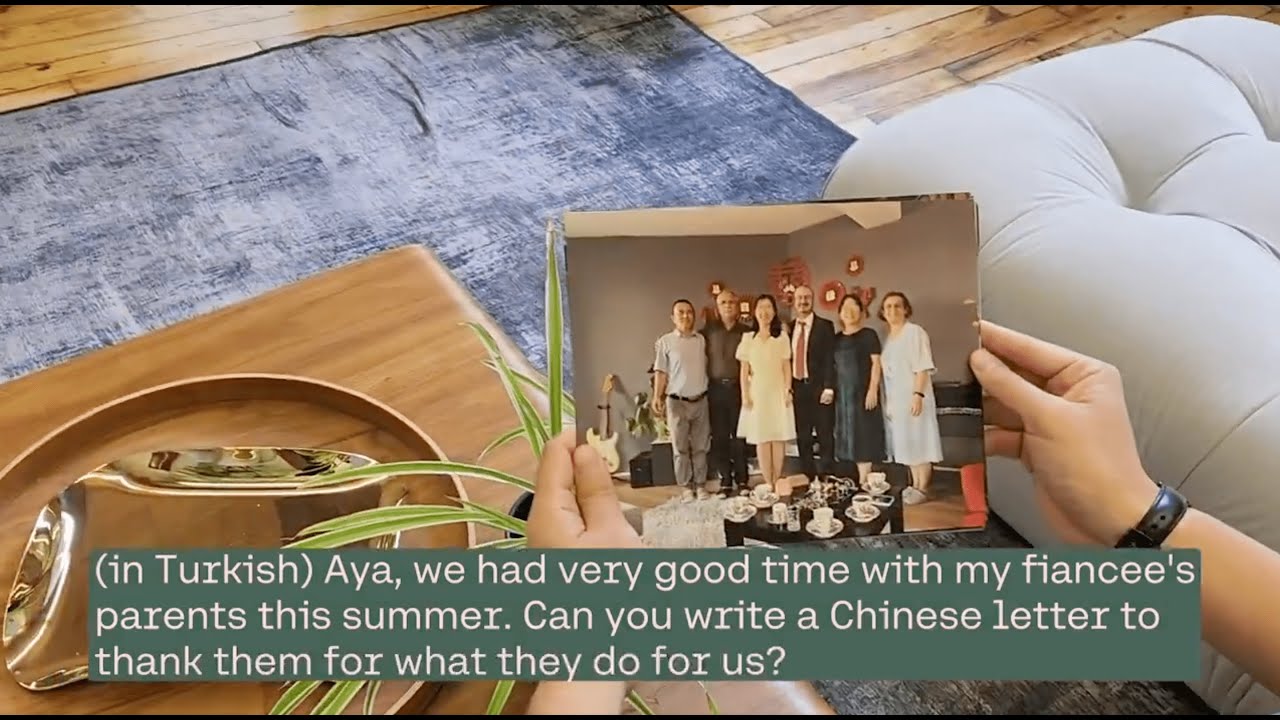

Featured videos

Recognition for Aya

- Stanford University HAI, Featured releases of 2024: for “Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning”

- ACL 2024, Best Paper Award: for “Aya Model: An Instruction Finetuned Open-Access Multilingual Language Model”