Shaping the frontier of ML research

Our mission

Changing where, how, and by whom breakthroughs happen

Cohere Labs pushes the boundaries of fundamental ML research while changing who shapes it, bringing diverse perspectives together to build trustworthy, collaborative, and universally capable AI systems.

Cohere Labs at a glance

papers published

Open Science collaborators

global project partners

Collectively advancing the science of AI

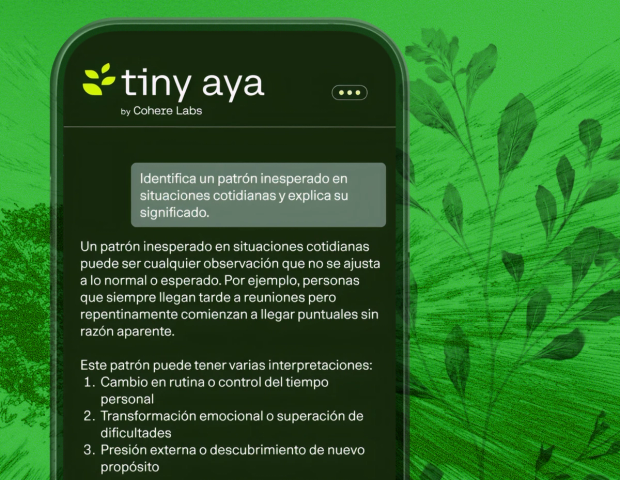

New Release

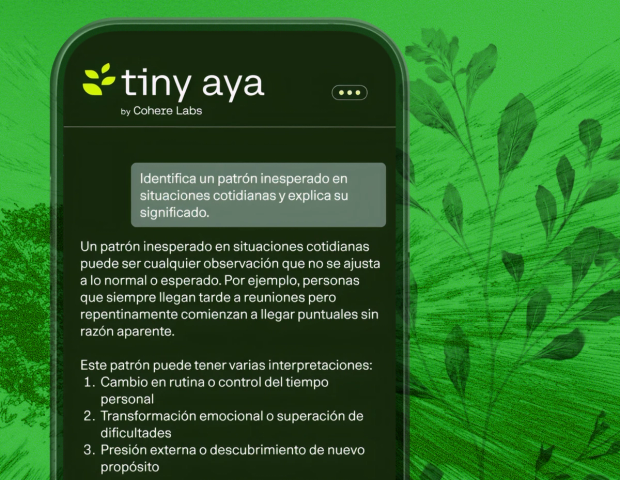

Tiny Aya

Open Science Initiative

By nurturing a vibrant Open Science Community of 4,500 members from 150 countries and releasing all of our research and models to the public, we are empowering the next era of scientific discovery and progress.

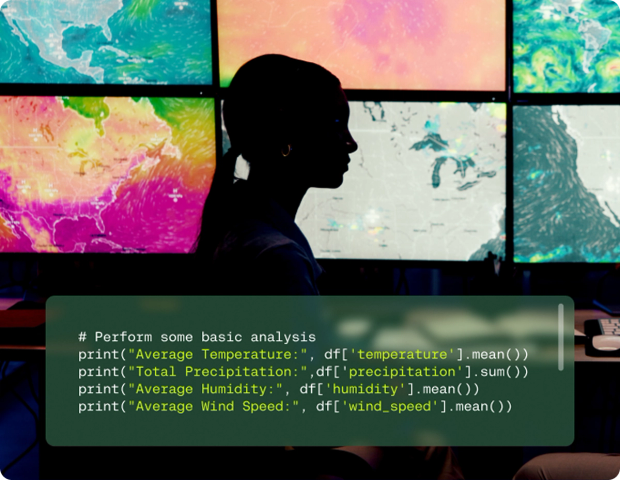

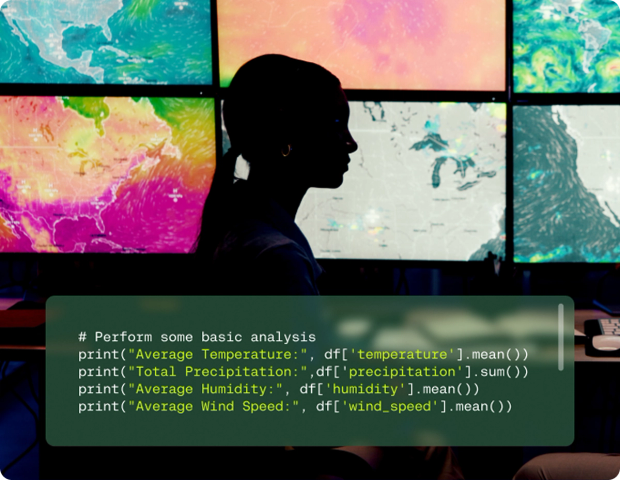

Catalyst Grants

Catalyst Grants provide academic partners, civic institutions, and impact-focused organizations with free access to the Cohere API to pursue projects driving real-world change through artificial intelligence.

Research Scholars Program

The Scholars Program carves pathways for rising researchers to become the next generation of AI leaders through mentorship with our top-tier research team and access to powerful research infrastructure.

Featured models

Aya Models

A global initiative led by Cohere Labs to advance state-of-the-art multilingual AI. From our original Aya Dataset to our latest Tiny Aya models, we bridge gaps between people and cultures across the world.

Cohere Labs Hugging Face Space

Explore and interact with our models directly on our Hugging Face Space, making AI research and experimentation accessible to the global community.

Upcoming events

Tim Ossowski, PhD candidate

Tim Ossowski - OctoMed: Data Recipes for State-of-the-Art Multimodal Medical Reasoning

Tiny Aya Technical Deep Dive

Members of the Tiny Aya core technical team will get into all the details of our technical innovations, success stories, and lessons learned.

Frequently asked questions

- In 2017, a team of friends, classmates, and engineers started a distributed research collaboration, with a focus on creating a medium for early-career AI enthusiasts to engage with experienced researchers – they called it “for.ai.” Two of those co-founding members, Aidan Gomez and Ivan Zhang, later went on to co-found Cohere, and many of the original members went on to do exciting things (pursuing PhDs, working at industry and academic labs).

At the time, For AI was one of the first community-driven research groups to support independent researchers around the world. In June 2022, For AI was brought back as "Cohere For AI" when we started our journey as a dedicated research lab and community for exploring the unknown, together. We renamed to Cohere Labs in April 2025.

Watch the Cohere Labs history video here.

- We do not charge for participating in any of our programs, and are committed to supporting educational outreach programs, which include compute resources and infrastructure needed to participate in machine learning research.

- Our full list of positions is available here.

- To stay up to date on upcoming talks, sign up for our mailing list.

You can also apply to join our open science community or follow us on LinkedIn and Twitter.

- Aya is a state-of-the-art, open source, massively multilingual research LLM covering 101 languages – including more than 50 previously underserved languages. Learn more here.