
Luis Serrano

What Is Attention in Language Models?

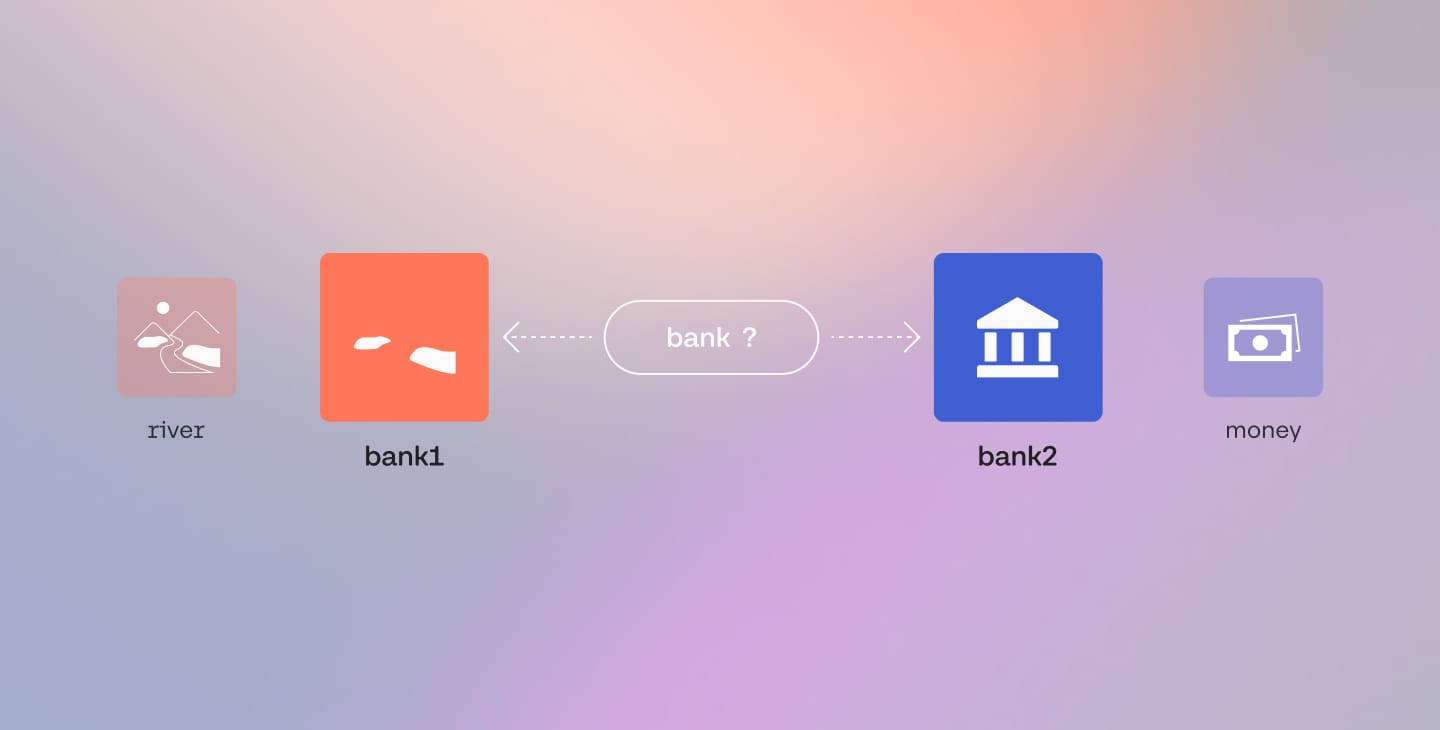

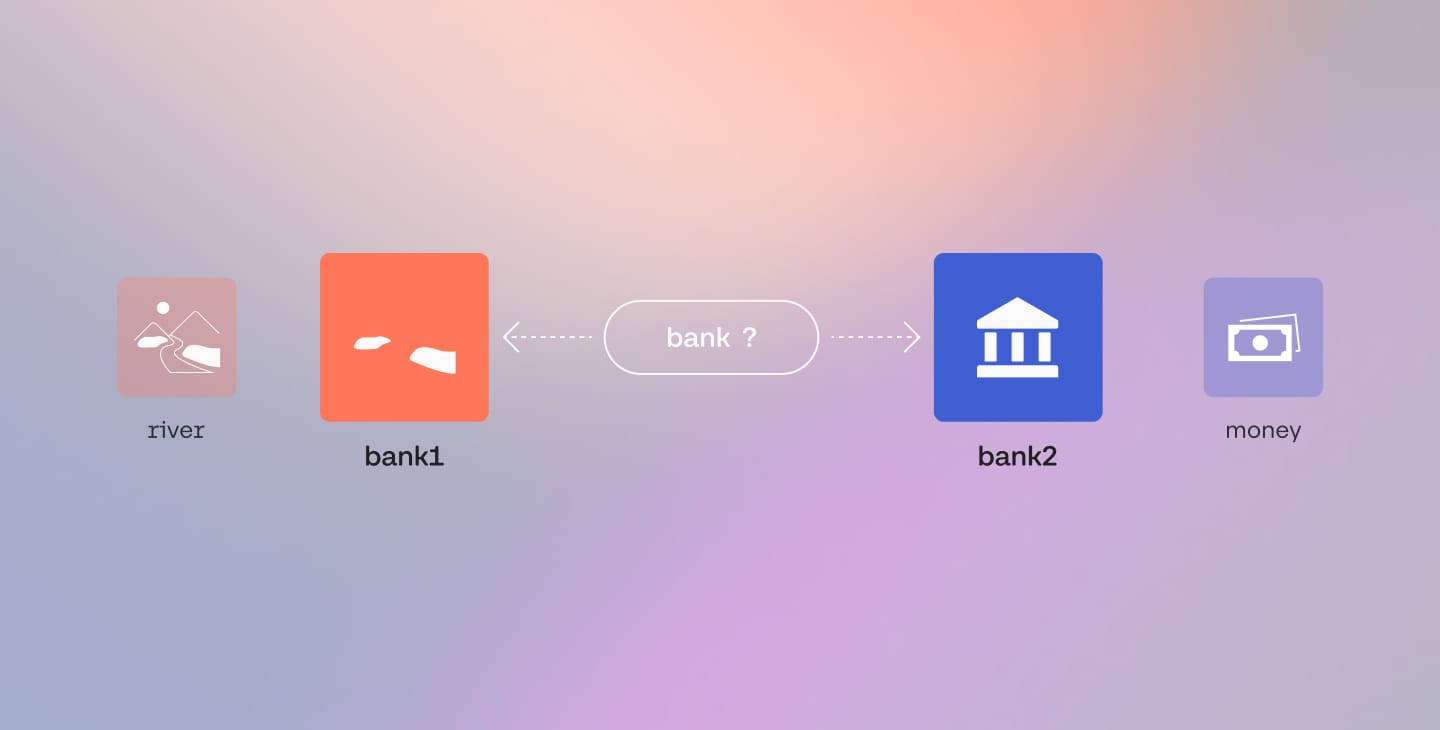

A major obstacle for language models is a word that means different things in different contexts. When a model encounters such a word, it has to use the rest of the sentence to work out which meaning is intended. This is precisely what self-attention does.
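To make the idea concrete, here is a minimal sketch of self-attention on the ambiguous word "bank". It is a toy under stated assumptions, not how any production model is implemented: the 2-D embeddings are hand-picked for illustration (they are not from a trained model), and the learned query, key, and value projections used in real transformers are omitted, so each word's raw embedding plays all three roles.

```python
import numpy as np

# Hypothetical 2-D embeddings, hand-picked for illustration only.
# Axis 0 loosely means "nature", axis 1 loosely means "finance".
# "bank" starts exactly between the two senses.
EMBEDDINGS = {
    "river": np.array([1.0, 0.0]),
    "money": np.array([0.0, 1.0]),
    "bank": np.array([0.5, 0.5]),
}


def softmax(x):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def self_attention(words):
    """Simplified self-attention: no learned projections (an assumption).

    Each output row is a weighted average of all input embeddings, where
    the weights come from scaled dot-product similarity.
    """
    X = np.stack([EMBEDDINGS[w] for w in words])  # shape (n_words, dim)
    scores = X @ X.T / np.sqrt(X.shape[1])        # pairwise similarities
    weights = softmax(scores)                     # each row sums to 1
    return weights @ X                            # context-aware embeddings


# Index 1 is "bank" in both word lists.
bank_near_river = self_attention(["river", "bank"])[1]
bank_near_money = self_attention(["money", "bank"])[1]

print(bank_near_river)  # [0.75 0.25], pulled toward the "nature" axis
print(bank_near_money)  # [0.25 0.75], pulled toward the "finance" axis
```

The same starting vector for "bank" ends up in two different places depending on its neighbor, which is the sense in which attention uses context to pick a meaning.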
