LivePerson Innovates Conversational AI in Partnership with Cohere

LivePerson

LivePerson is a global leader in trustworthy and equitable AI for business. Hundreds of the world’s leading brands, including HSBC, Chipotle, and Virgin Media, use the company’s Conversational Cloud platform to engage with millions of consumers safely and responsibly. LivePerson powers a billion conversational interactions every month, providing a uniquely rich data set and safety tools to unlock the power of generative AI and large language models for better business outcomes. Fast Company named LivePerson the #1 Most Innovative AI Company in the world.

Overview

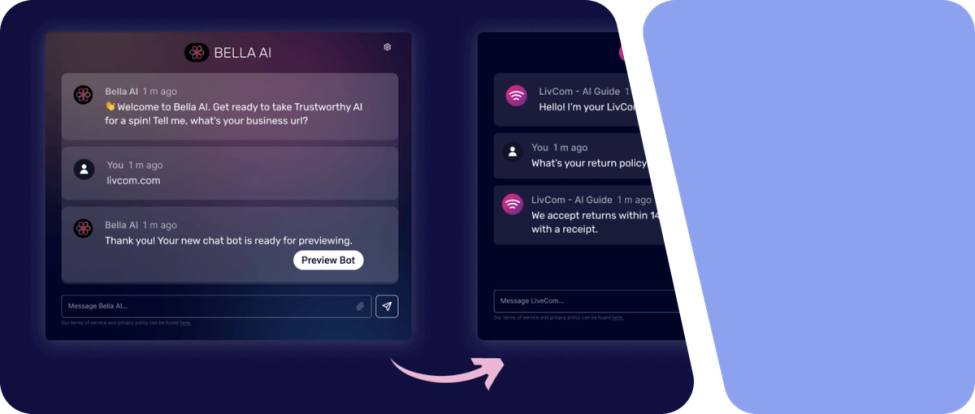

Today’s messaging-based customer support is light-years away from the web chat experience of the past. Thanks to advancements in generative AI powered by large language models (LLMs), customers can interact with automated systems in a way that feels natural, dynamic, and human. LLMs can also enable systems to go beyond answering questions and take specific actions on behalf of customers.

Since 1995, LivePerson has been at the forefront of this evolution, and in recent years, the company has focused on conversational AI solutions for a range of use cases, including customer support, commerce, sales and marketing, and IT service management. Hundreds of the world’s leading brands use LivePerson’s Conversational Cloud platform to engage with millions of customers through safe and sophisticated automated conversations.

As they build the next generation of conversational AI solutions, LivePerson’s engineering team is working with Cohere as a co-innovation partner to help level up the accuracy, quality, and groundedness of the conversational experience.

Looking for a Co-Innovation Partner

As a leader in conversational AI solutions, LivePerson wanted to capitalize on the latest LLM advancements to help the company move faster toward its goal of automating the world’s business conversations at scale.

So the company began looking for an LLM provider to partner with. “We wanted a partner that had substantial expertise in the LLM space,” recalls Joe Bradley, Chief Scientist at LivePerson, “and was willing to solve problems with us, so that they, in turn, could learn and grow their own offerings.”

Cohere was at the top of the list due to its industry reputation. “The partnership offered high-quality LLMs that are well respected in the field, along with technical support,” says Bradley. “Cohere was willing to engage with us at a much deeper level than other providers, and experiment with us to learn what strategies work in our context.”

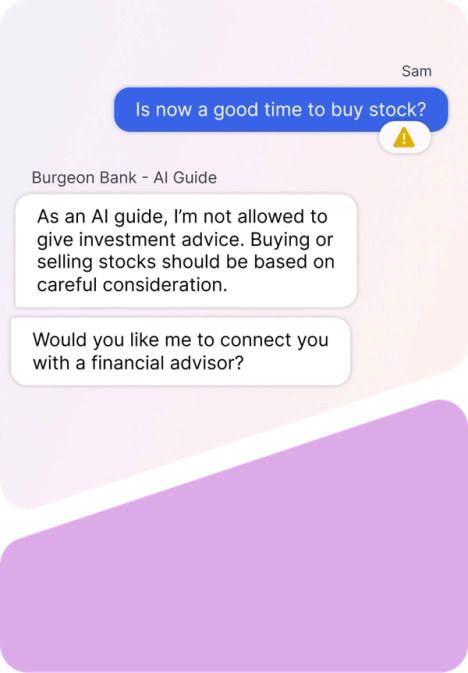

For the partnership, Bradley and his team wanted to learn how to keep LLM-powered conversations grounded, factual, and “on the rails,” with outputs that meet enterprise needs in real-world use cases. They also wanted Cohere’s help training models that enable systems to take specific actions or complete tasks.

Collaborating on Tech Support Conversations

Bradley’s team began working with Cohere to improve LivePerson’s own tech support conversational AI system. He says, “The tech support use case is a very sturdy test for LLMs because we’ve got all this conversational data to draw from, and the system needs to provide useful guidance to people about a complicated product. And the results so far have been impressive. Cohere’s models are standing up really well against the big third-party APIs, and they are tuned by real conversational data for our tech support use case, rather than being generic.”

The team trained Cohere models on LivePerson’s own data, and then tested the output with the company’s human contact center agents. “We can train the models more deeply in concert with Cohere than we can with some of the open APIs,” says Bradley.

When fine-tuning the Cohere Command core model, Bradley’s team followed a “relaxed training” paradigm and interleaved other data into their dataset in order to avoid overfitting on specific tasks. This enabled the model to maintain its general reasoning capabilities. “Cohere allows us to experiment with paradigms like that that give us the best of both worlds,” he says.
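The source does not describe how the data was interleaved, so the following is only a minimal sketch of the general idea behind mixing general-purpose examples into a task-specific fine-tuning set. The function name `interleave_datasets` and the `general_fraction` parameter are hypothetical, and the ratio LivePerson actually used is not stated.

```python
import random
from typing import List, TypeVar

T = TypeVar("T")


def interleave_datasets(task_examples: List[T], general_examples: List[T],
                        general_fraction: float = 0.3, seed: int = 0) -> List[T]:
    """Mix general-purpose examples into a task-specific fine-tuning set.

    general_fraction is a hypothetical knob: the share of the final mixture
    that comes from general data rather than the narrow target task.
    """
    if not 0.0 <= general_fraction < 1.0:
        raise ValueError("general_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_general / (n_task + n_general) = general_fraction for n_general.
    n_general = round(len(task_examples) * general_fraction / (1.0 - general_fraction))
    # Never request more general examples than are available.
    n_general = min(n_general, len(general_examples))
    mixture = list(task_examples) + rng.sample(general_examples, n_general)
    # Shuffle so that training batches contain both kinds of data.
    rng.shuffle(mixture)
    return mixture
```

With 70 task examples and `general_fraction=0.3`, the mixture gains 30 general examples, so roughly a third of each shuffled batch keeps exercising the model's broader abilities instead of only the narrow task.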

The collaboration has also resulted in some feature improvements. For example, when a customer asks the bot a question, it’s important that the bot pulls the right information from the knowledge base. The team worked with Cohere on re-ranking search results from their knowledge base, and then weaving the ranked results into a broader prompt for the LLM to generate an answer to the customer. “It’s not easy to do well, but they did a really nice job. It helped performance and made quite an impact,” says Bradley.
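The retrieve, re-rank, then prompt flow described above can be sketched as follows. This is not LivePerson’s or Cohere’s implementation: `rerank` and `build_prompt` are hypothetical helpers, and the word-overlap score is only a placeholder for the learned reranking model a production system would call at that step.

```python
import re
from typing import List, Set, Tuple


def _tokens(text: str) -> Set[str]:
    """Lowercase word tokens used by the placeholder scorer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rerank(query: str, passages: List[str], top_k: int = 3) -> List[Tuple[float, str]]:
    """Score each knowledge-base passage against the query and keep the top_k.

    Placeholder scorer: Jaccard overlap of word tokens. A real system would
    replace this with a learned reranking model.
    """
    q = _tokens(query)
    scored = []
    for passage in passages:
        t = _tokens(passage)
        union = q | t
        score = len(q & t) / len(union) if union else 0.0
        scored.append((score, passage))
    # Highest score first; Python's sort is stable, so ties keep their input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, ranked: List[Tuple[float, str]]) -> str:
    """Weave the ranked passages into a grounded prompt for the LLM."""
    context = "\n".join(f"[{i}] {p}" for i, (_, p) in enumerate(ranked, start=1))
    return (
        "Answer the customer using only the numbered knowledge-base passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"Passages:\n{context}\n\n"
        f"Customer question: {query}\nAnswer:"
    )
```

Putting the highest-ranked passages first and telling the model to answer only from them is one common way to keep generated answers grounded in the knowledge base.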

More Use Cases on the Horizon

LivePerson tech support is now using Cohere models in production, and the team has shifted to working with Cohere on several key use cases for banking and e-commerce clients, including automating specific actions during a conversation. These projects are still experimental, and so far they are going well. “The Cohere models for banking are performing, again, with very high quality,” Bradley says. “They tend to generate more succinct output than other models, which makes them well-suited for task-based conversations. They are more likely to be factually correct and well-grounded without overindulging themselves in their own language.”

LivePerson is also exploring opportunities to use LLMs in other enterprise AI use cases, such as HR, IT, and industry-specific workflows, for automated customer and employee support. For example, AI assistants can help bank tellers check policy or regulatory requirements, or give salespeople quick access to product and service details.

For LivePerson’s customer brands, the impact of LLM-powered conversational solutions is substantial. Beyond happier customers and employees, these solutions allow brands to automate more workflows, reduce operational costs, and make better use of resources by focusing human staff on higher-value tasks.
